Immersive User Experience (UX) and Augmented Reality (AR)

Objective:

This blog post aims to explore the integration of augmented reality (AR) technology using Zapworks and Unity WebGL. It will delve into how AR functions, the advantages it holds over virtual reality (VR), the different types of AR, important design considerations, and real-world applications of AR across various industries.

What is Augmented Reality and How Does it Work?

Augmented reality (AR) is a technology that overlays digital content—such as images, animations, or information—onto the real world through devices like smartphones, tablets, or AR glasses. It works by using a camera to capture the physical environment and display computer-generated elements on top of it. This creates an immersive experience where users can interact with virtual objects in their actual surroundings.

AR can be powered by different technologies, such as computer vision, simultaneous localization and mapping (SLAM), and depth sensing. These systems help detect objects and surfaces, recognize patterns, and understand spatial environments to align digital elements with the real world.
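To make the idea of aligning digital elements with the real world more concrete, here is a minimal sketch of the projection step, assuming a simple pinhole camera model. The `CameraIntrinsics` class, the `project_to_screen` function, and the numbers used are illustrative assumptions, not part of any particular AR SDK.

```python
from dataclasses import dataclass


@dataclass
class CameraIntrinsics:
    """Hypothetical pinhole-camera parameters, all in pixels."""
    focal_x: float
    focal_y: float
    center_x: float
    center_y: float


def project_to_screen(point_xyz, camera):
    """Map a 3D point in camera space (metres, z pointing forward) to pixels.

    Returns None when the point is behind the camera and cannot be drawn.
    """
    x, y, z = point_xyz
    if z <= 0:
        return None
    u = camera.focal_x * x / z + camera.center_x
    v = camera.focal_y * y / z + camera.center_y
    return (u, v)


# Assumed values for a 640x480 camera image.
camera = CameraIntrinsics(focal_x=800.0, focal_y=800.0, center_x=320.0, center_y=240.0)

# A virtual object 2 m straight ahead lands on the image centre.
print(project_to_screen((0.0, 0.0, 2.0), camera))  # (320.0, 240.0)

# The same object shifted 0.5 m to the right appears 200 px right of centre.
print(project_to_screen((0.5, 0.0, 2.0), camera))  # (520.0, 240.0)
```

Real AR frameworks also estimate where the camera is in the room (for example with SLAM) before this step, so the sketch only covers the final "draw it in the right place" part.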

AR vs. VR: Accessibility and User Reach:

Unlike virtual reality (VR), which immerses users in a fully digital environment and typically requires a specialized headset, augmented reality is much more accessible. Most AR experiences run on an ordinary smartphone or tablet, so users can try them without buying extra hardware. This wide reach is one of the reasons AR is becoming increasingly popular.

Types of AR:

There are several types of AR, each offering different levels of interaction and immersion:

1. Marker-Based AR:

Relies on visual markers (like QR codes or images) that trigger the appearance of digital content when viewed through a device’s camera.

2. Markerless AR (Location-Based AR):

Uses GPS, accelerometers, and other sensors to position digital objects within a real-world environment. Pokémon GO is a well-known example.

3. Projection-Based AR:

Projects digital content onto physical surfaces without the need for a screen, allowing users to interact with the projection.

4. Superimposition-Based AR:

Overlays digital objects on top of real-world objects to replace or enhance their appearance. This is commonly used in healthcare, for example during surgery.

What to Consider When Designing for AR:

When designing for AR, it’s essential to consider both the digital and physical environments and how users will interact with them. Key factors include:

- Contextual Integration: AR elements should align seamlessly with the real-world environment, avoiding distraction or distortion.

- User Experience: Since AR experiences are often viewed through handheld devices, ease of use and intuitive interactions are crucial. Ensure navigation and controls are simple and accessible.

- Performance: The application must run smoothly on mobile devices without lagging or overheating. This means optimizing 3D models and avoiding heavy processing loads.

- Safety and Environment: Users interact with AR in real-world settings, so designs must account for their surroundings, ensuring that virtual elements don’t block critical views or lead to accidents.

- Engagement: AR experiences should be immersive but not overwhelming. Provide meaningful interactivity to engage users without creating confusion.

Real-World Usage of Augmented Reality

AR is increasingly being adopted across industries to enhance user experiences and streamline operations. Here are a few examples:

- Retail: Brands like IKEA use AR apps to let customers visualize how furniture will look in their homes before purchasing.

- Education: AR is used in educational tools to provide interactive learning experiences, such as visualizing complex subjects like anatomy or astronomy.

- Healthcare: Surgeons use AR to project patient data or guide operations, enhancing precision and reducing risks.

- Tourism: AR apps can provide immersive historical tours, overlaying information and visuals at tourist sites to enrich the visitor experience.

- Marketing: AR filters and virtual try-on features for products like makeup or accessories are widely used on social media platforms and in e-commerce.

Figure 1: The official Pokémon GO Legendary Trailer, which highlights the game’s key features (GameSpot, 2017).

Pokémon GO and Real-World Integration

Pokémon GO is one of the most popular examples of augmented reality (AR) successfully integrating with the real world. Released in 2016, the game uses AR to bring virtual Pokémon creatures into players’ physical environments. By leveraging a smartphone’s GPS and camera, Pokémon GO allows players to see and capture Pokémon that appear in real-world locations through their phone screens. This creates an immersive experience where the digital and physical worlds blend seamlessly.

The game’s real-world integration is built on location-based AR, meaning it uses the player’s real-time geographic location to position virtual Pokémon at nearby places. Players can visit various landmarks, known as “Pokéstops” and “Gyms,” to collect items, battle other players, and participate in special events. This encourages players to explore their surroundings, visit new locations, and engage with the world around them in a unique way.
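The location check behind this kind of gameplay can be sketched with the haversine formula, which gives the distance between two GPS coordinates. This is only an illustration under stated assumptions: the function names, the 40 m interaction radius, and the sample coordinates are hypothetical and do not describe how Pokémon GO itself is implemented.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude pairs."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_points(player, points_of_interest, radius_m=40.0):
    """Return the names of points of interest within radius_m of the player."""
    lat, lon = player
    return [
        name
        for name, (poi_lat, poi_lon) in points_of_interest.items()
        if haversine_m(lat, lon, poi_lat, poi_lon) <= radius_m
    ]


# Made-up coordinates: one landmark about 11 m away, one roughly 400 m away.
player = (51.5007, -0.1246)
landmarks = {
    "clock tower": (51.5008, -0.1246),
    "bridge": (51.5030, -0.1200),
}
print(nearby_points(player, landmarks))  # ['clock tower']
```

Only the landmark inside the radius is returned, which is the point at which a location-based app would let the player interact with it.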

Pokémon GO’s real-world integration was a game-changer for mobile gaming, demonstrating how AR could be used to connect virtual activities with physical environments. The game’s success has inspired many other location-based AR applications, particularly in fields like tourism, fitness, and urban exploration.

Figure 2: Augmented reality is also used to enhance online brand experiences, where it helps create immersive and interactive customer engagement.

Augmented Reality in the Beauty Industry

The beauty industry has quickly embraced augmented reality as a tool to enhance the customer experience, particularly through virtual try-on technology. AR allows customers to experiment with different beauty products, such as makeup or hair colors, without physically applying them. This helps customers make more informed decisions about purchases, all from the convenience of their mobile devices.

A prime example of AR in the beauty industry is L’Oréal’s ModiFace. ModiFace is an AR platform that enables users to try on virtual makeup, including lipstick, foundation, eyeshadow, and more, in real time using their smartphones. The app uses facial recognition and AR technology to map a user’s face and apply digital makeup that follows their features. This virtual try-on experience is available both online and in-store, allowing customers to see how products will look on their skin tone and facial structure before committing to a purchase.

Augmented Reality Using Zapworks and Unity (Lab Exercise)

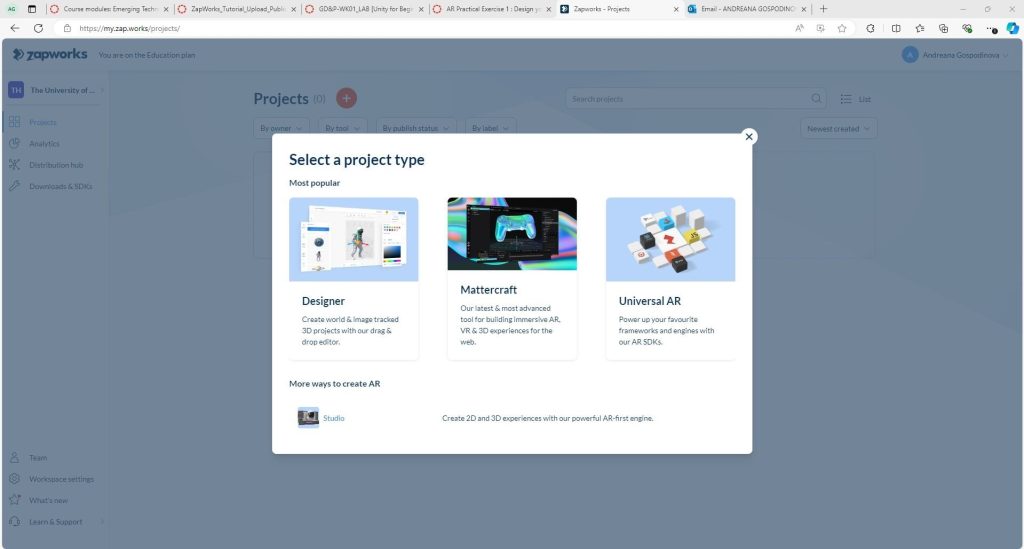

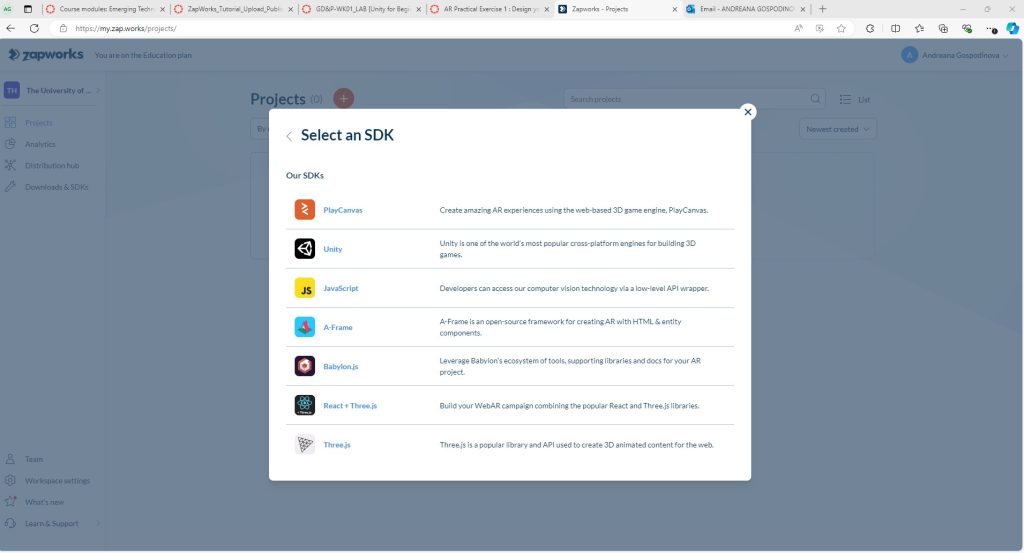

As part of this lab exercise, we were tasked with creating a marker-based AR experience using Zapworks and Unity. This involved understanding the fundamentals of AR and how marker-based tracking works in these platforms. Below is an overview of Zapworks and how its image marker tracking functions.

What is Zapworks?

Zapworks is a platform developed by Zappar that allows users to create, publish, and share augmented reality (AR) experiences. It provides a user-friendly toolkit for designing interactive AR content that can be viewed through the Zappar app or embedded into websites and apps via WebAR (web-based AR). Zapworks offers different tools for developers and designers, such as Zapworks Studio, Widgets, and Universal AR SDKs, enabling a wide range of AR creation options from basic interactive experiences to more advanced, highly customized AR projects.

One of the key features of Zapworks is its support for marker-based AR, which allows digital content to be triggered when a camera recognizes a specific image or marker. This capability makes Zapworks a popular choice for creating AR experiences that can be accessed easily by scanning printed materials, product packaging, or posters.

How Does Zapworks Image Marker Tracking Work?

In marker-based AR, image recognition plays a central role. Zapworks uses image marker tracking to recognize and track specific images in the real world, known as “markers.” These markers are pre-defined in the Zapworks environment, and the platform uses them to anchor and display AR content when a user scans the marker with their mobile device.

Here’s how the image marker tracking process works in Zapworks:

Image Upload: Users start by uploading an image or design (often called a “trigger image”) into the Zapworks platform. This image becomes the marker for the AR experience.

Marker Recognition: When a user scans the trigger image through the Zappar app or a WebAR link, the app’s camera recognizes and detects the image using computer vision algorithms.

Content Anchoring: Once the marker is detected, Zapworks overlays the digital content (such as 3D models, animations, videos, or text) onto the real-world view. The content is locked to the marker, meaning it will stay in place and move with the image as long as it remains in view of the camera.

Tracking: As the user moves their device, the app continues to track the marker’s position, ensuring that the AR content stays aligned with the marker, providing a stable and immersive experience.
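The anchoring and tracking steps above can be sketched in a few lines. This is a simplified 2D illustration, not Zapworks’ actual algorithm: real trackers estimate a full 3D pose, and the `MarkerPose` class, `anchor_to_marker` function, and all values here are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class MarkerPose:
    """Hypothetical 2D pose of a detected marker in the camera image."""
    center_x: float   # pixels
    center_y: float   # pixels
    angle_deg: float  # in-plane rotation of the marker
    scale: float      # pixels per marker unit (grows as the marker gets closer)


def anchor_to_marker(offset, pose):
    """Convert a content offset in marker units into screen pixels."""
    ox, oy = offset
    theta = math.radians(pose.angle_deg)
    # Rotate the offset with the marker, then scale and translate it.
    rx = ox * math.cos(theta) - oy * math.sin(theta)
    ry = ox * math.sin(theta) + oy * math.cos(theta)
    return (pose.center_x + pose.scale * rx, pose.center_y + pose.scale * ry)


# Content sits half a marker unit above the marker centre (screen y grows downward).
label_offset = (0.0, -0.5)

# Two consecutive camera frames: the marker moves and appears larger in the second.
frame_1 = MarkerPose(center_x=300.0, center_y=200.0, angle_deg=0.0, scale=100.0)
frame_2 = MarkerPose(center_x=340.0, center_y=220.0, angle_deg=0.0, scale=120.0)

print(anchor_to_marker(label_offset, frame_1))  # (300.0, 150.0)
print(anchor_to_marker(label_offset, frame_2))  # (340.0, 160.0)
```

Because the content position is recomputed from the marker’s pose on every frame, it appears locked to the image even as the device moves.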

AR Practical Exercise 1 – Designing an AR (Augmented Reality) Experience:

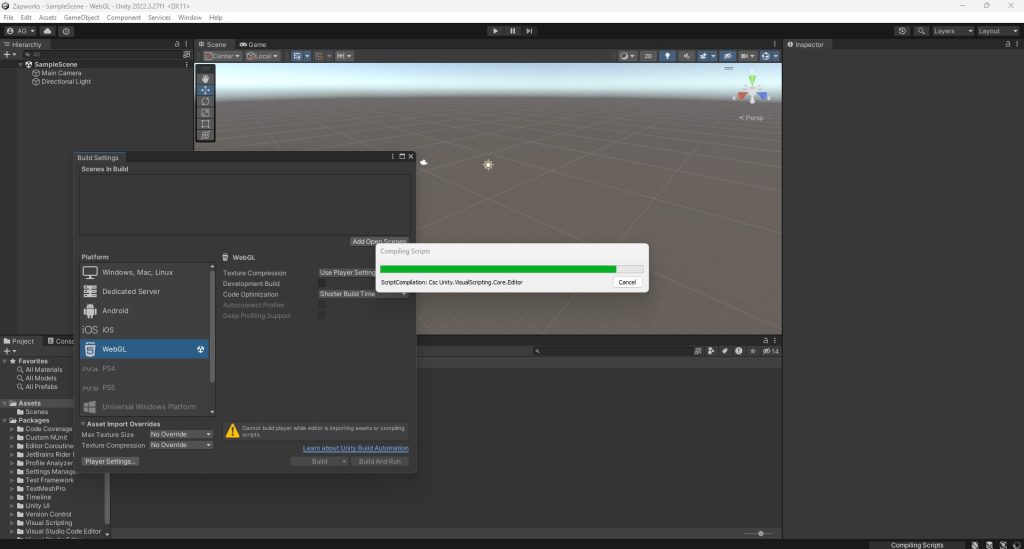

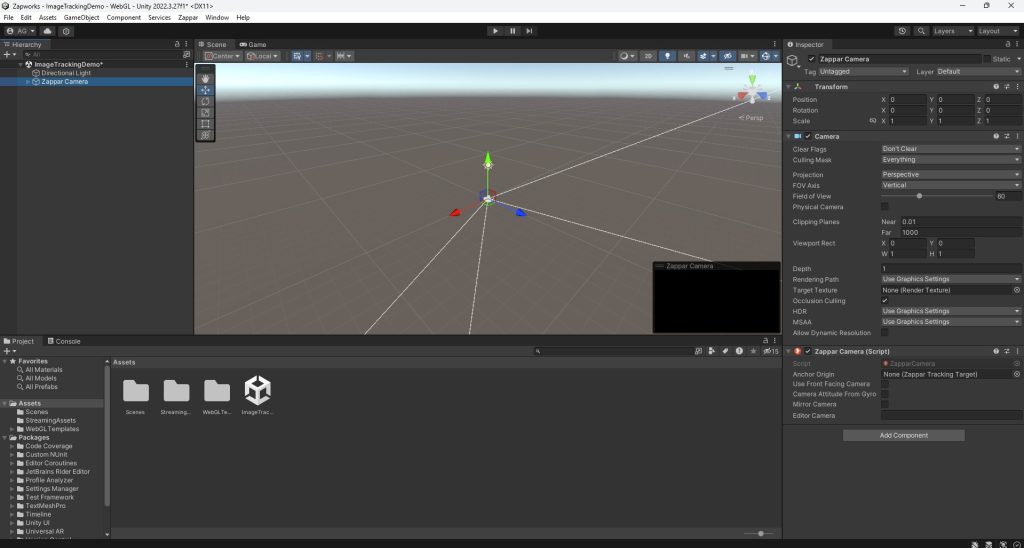

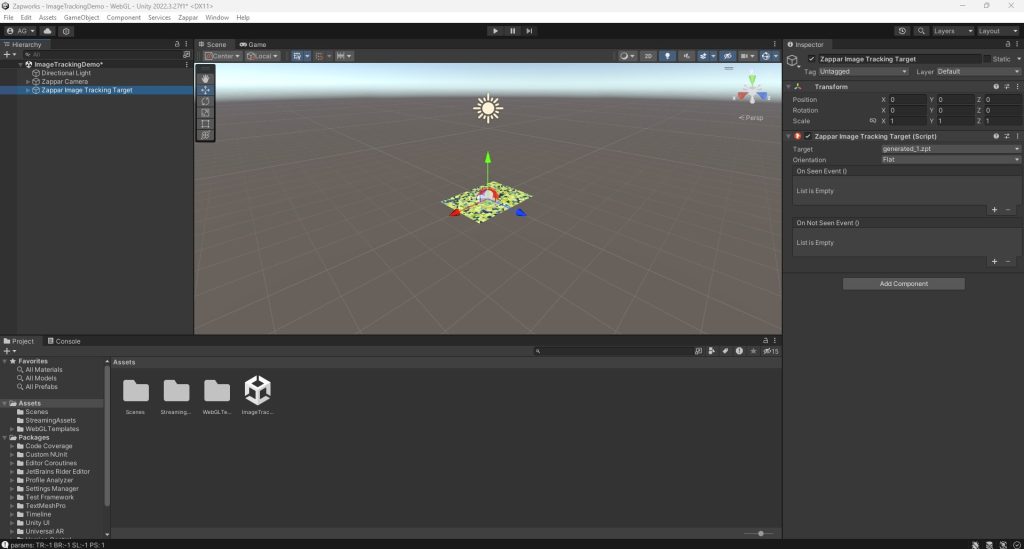

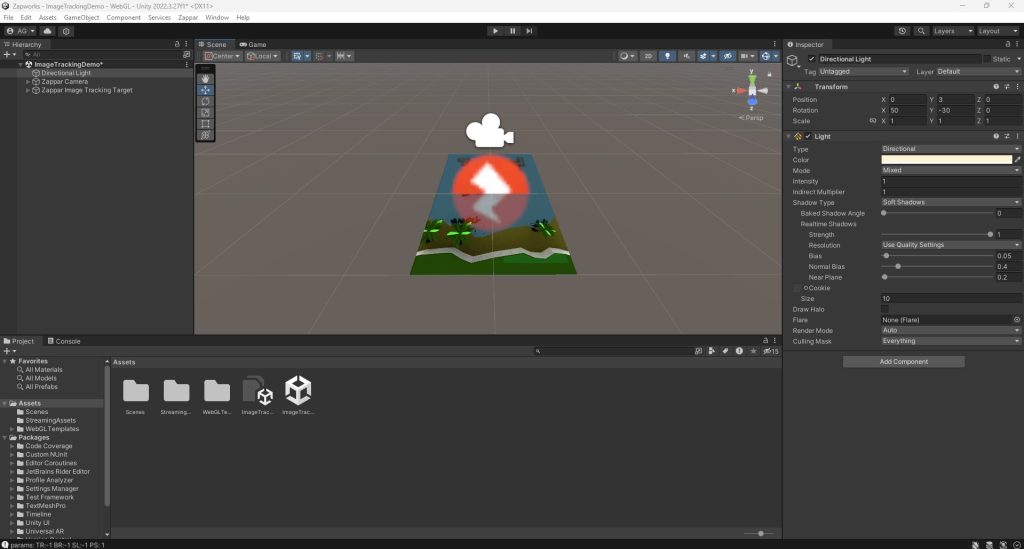

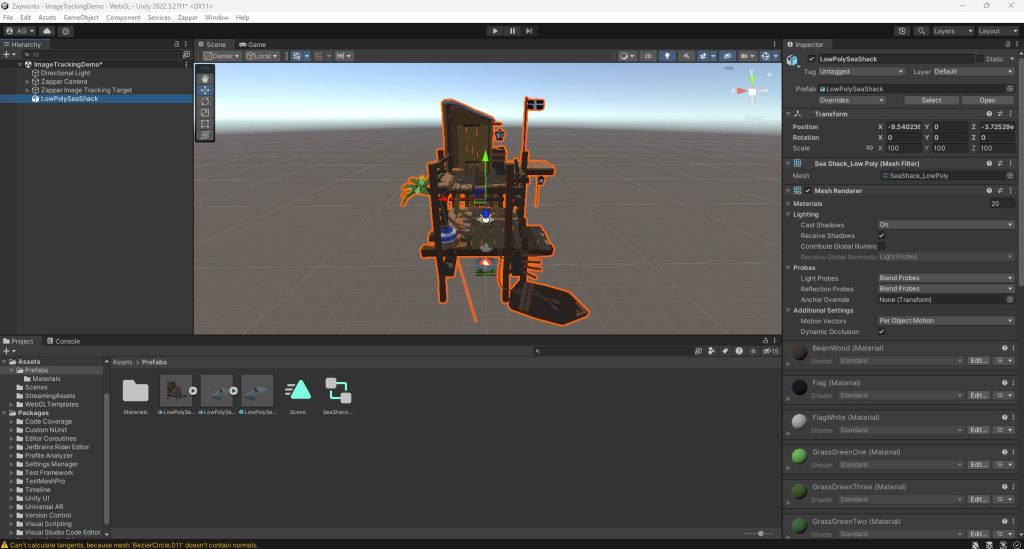

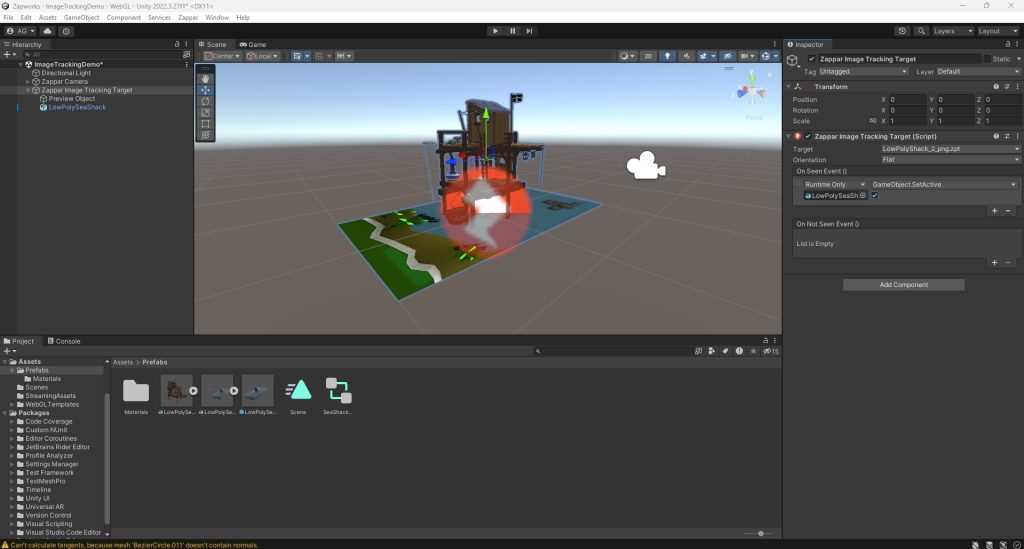

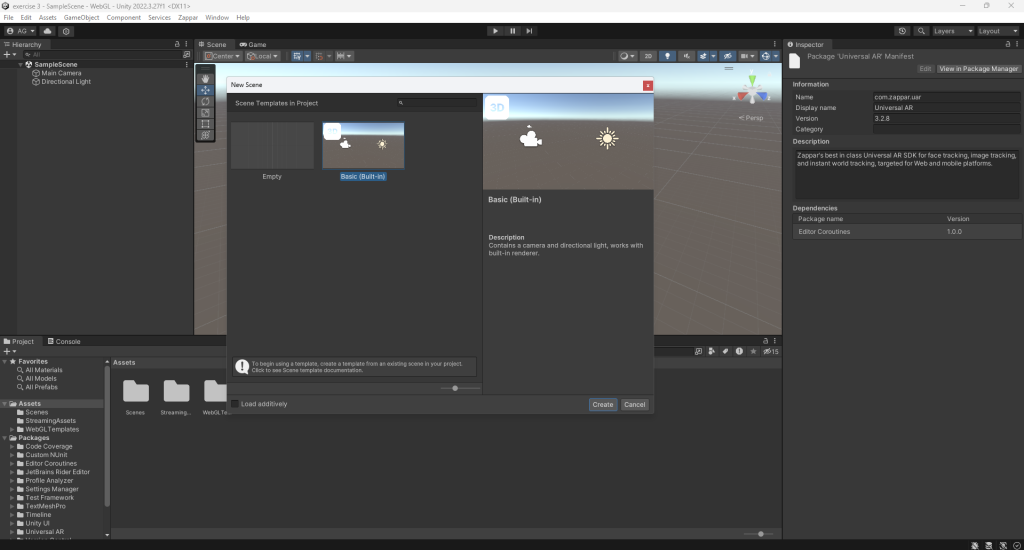

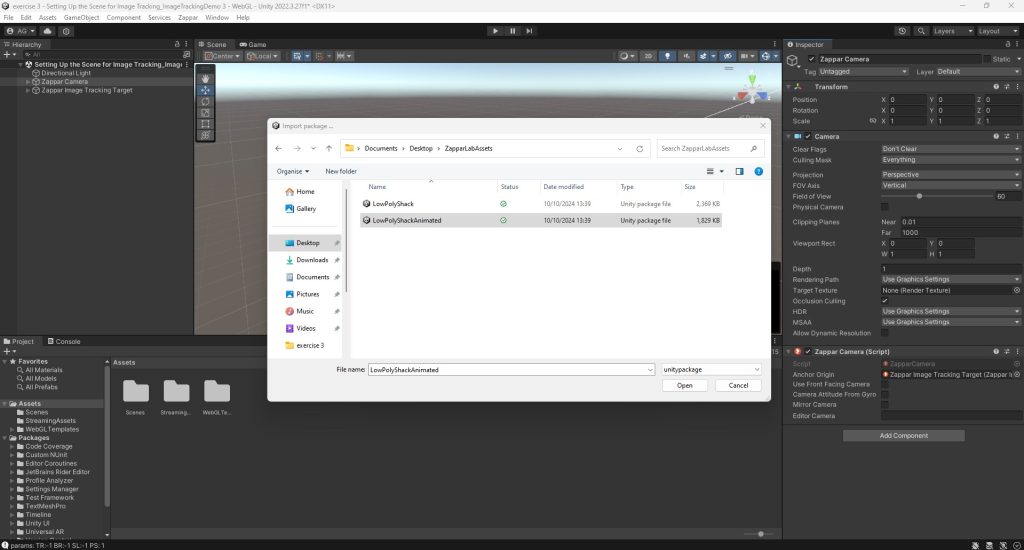

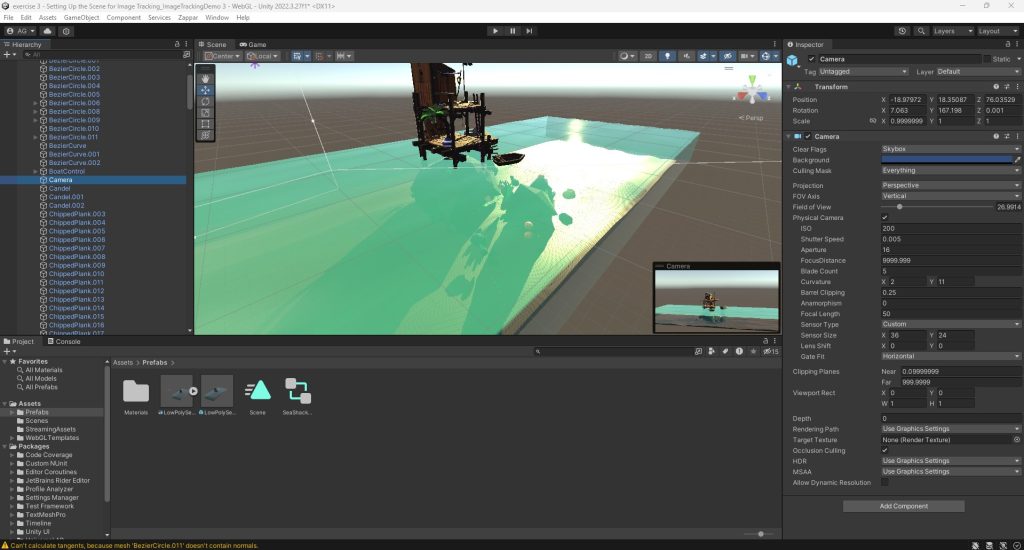

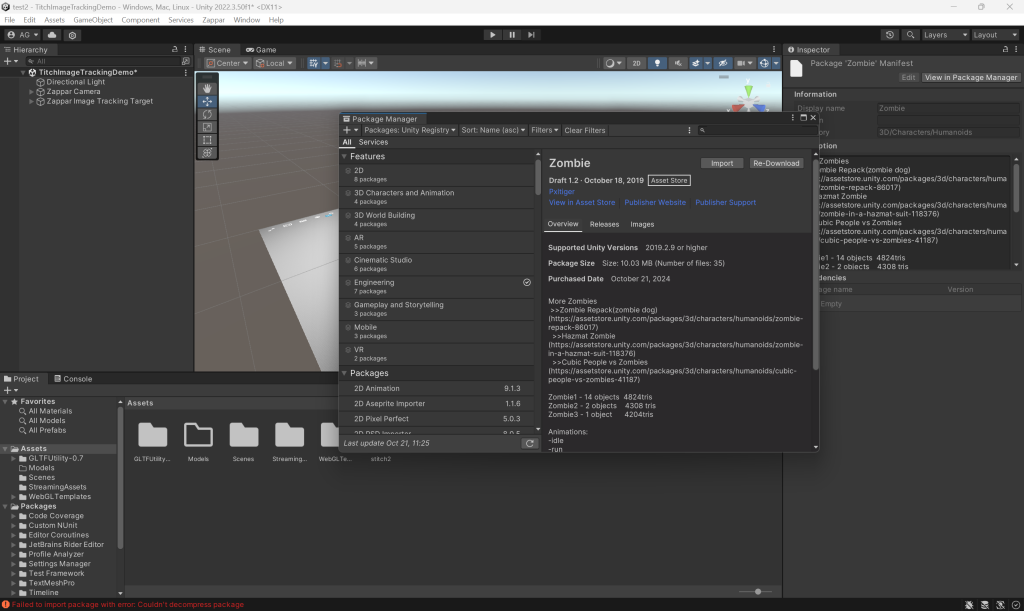

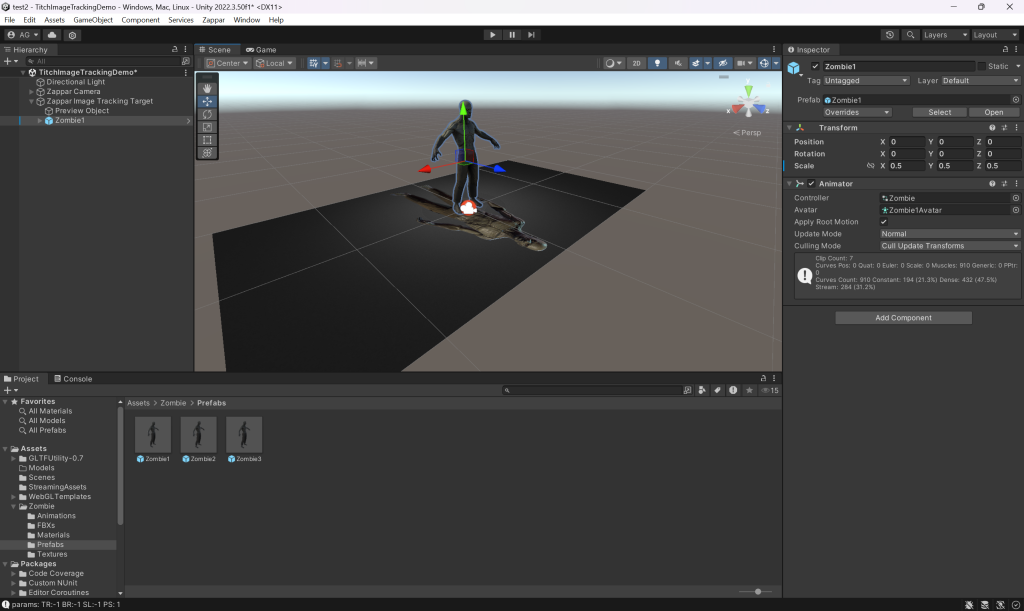

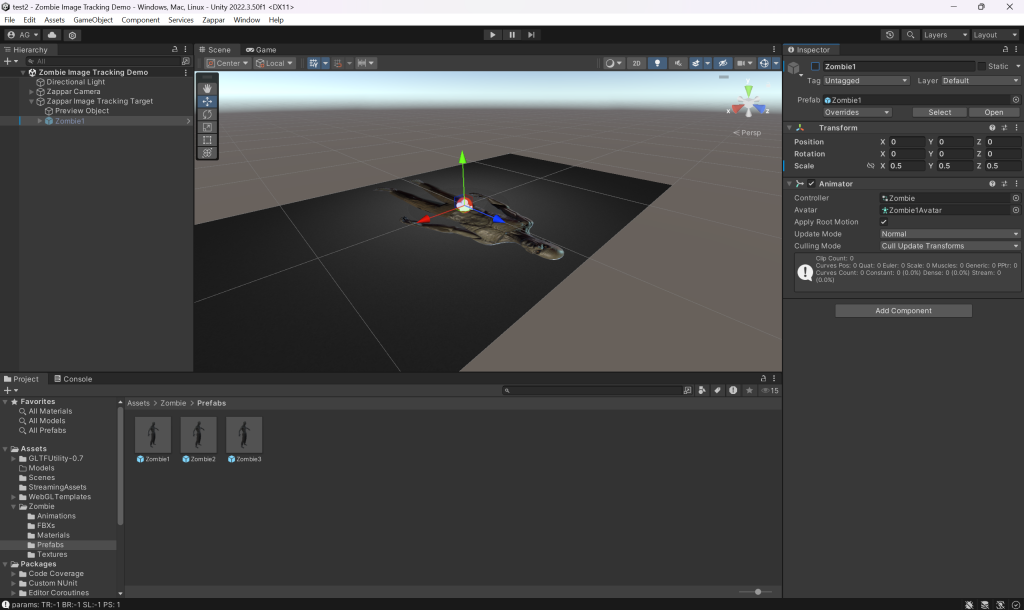

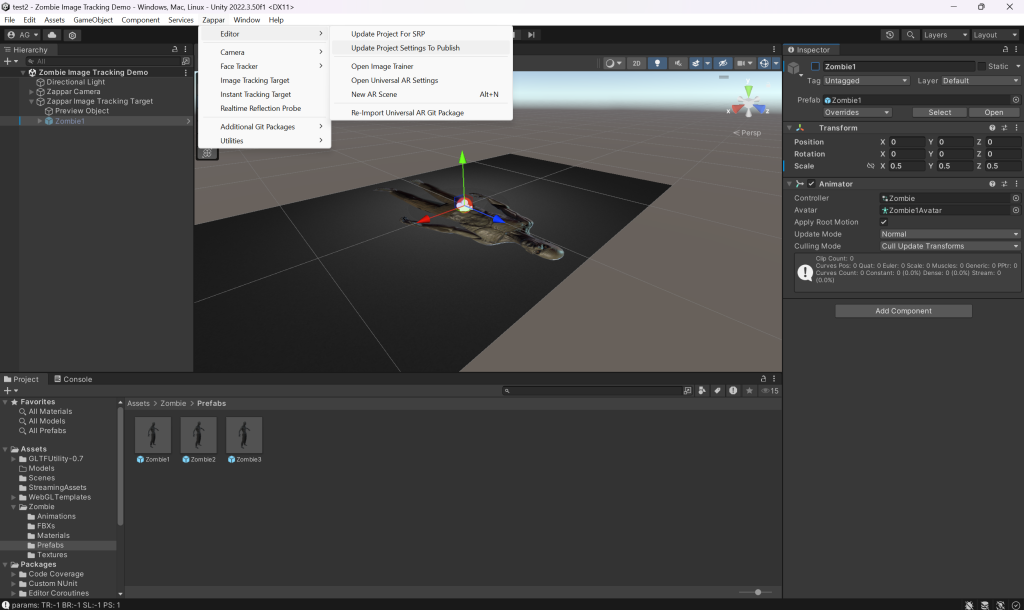

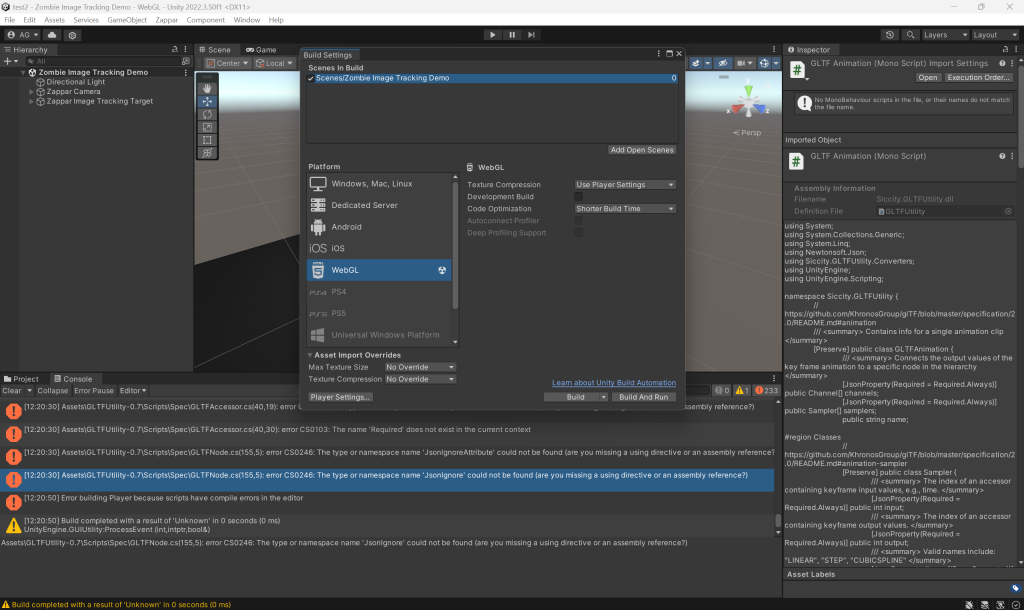

The first part of the task involved following detailed instructions to implement the concept in Unity. We used a downloadable asset pack to develop a 3D AR experience that could be activated by scanning the QR code. To bring the AR design to life, we configured Unity by installing the necessary plugins and packages, ensuring compatibility with Zapworks.

Below is a series of screenshots documenting the step-by-step development of the Augmented Reality workshop, illustrating key stages in the process from asset integration to final deployment.

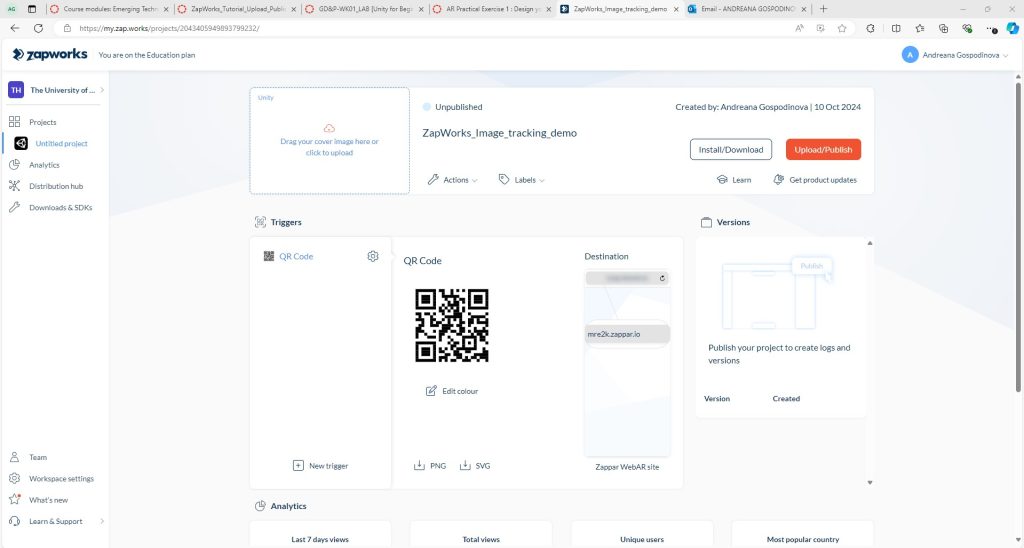

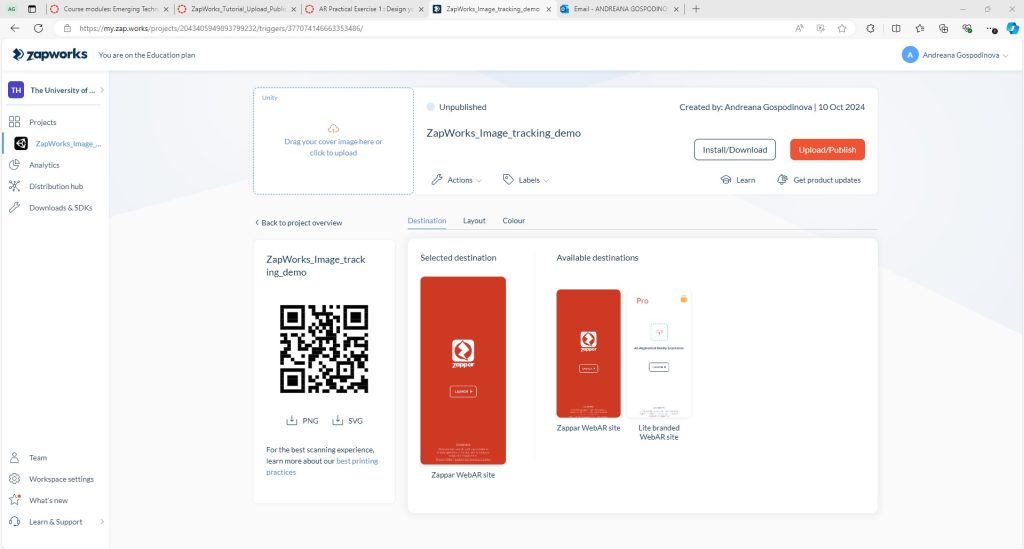

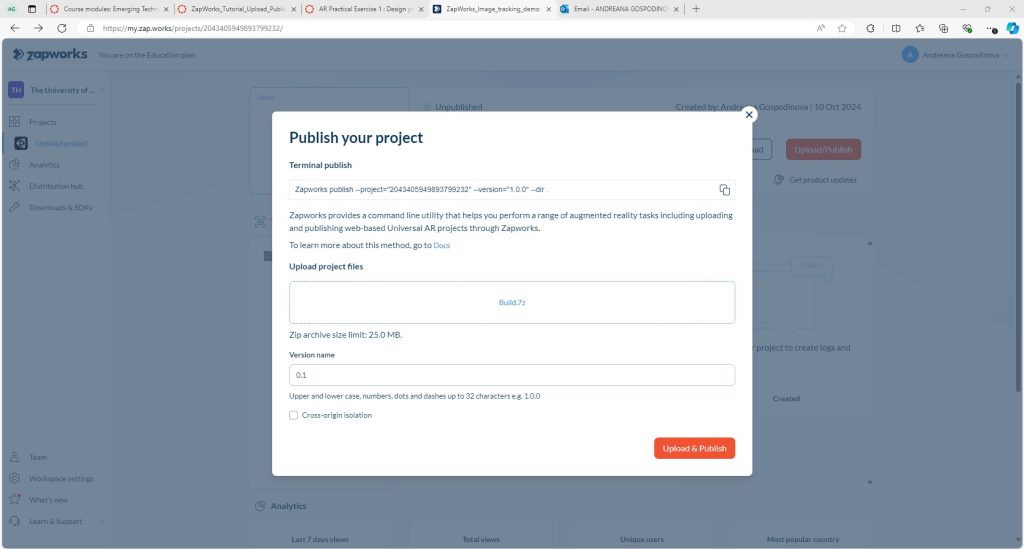

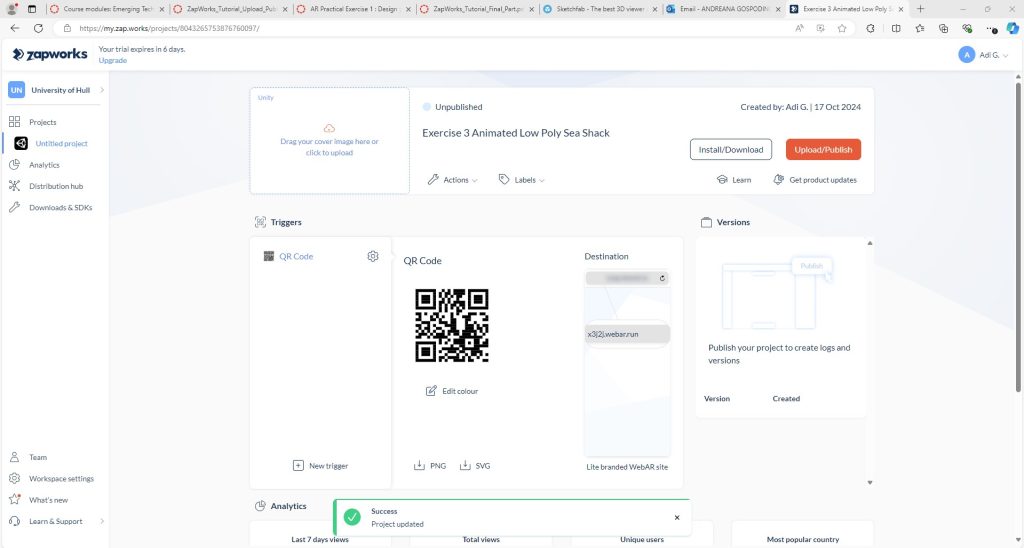

AR Practical Exercise 2 – Uploading and Publishing AR (Augmented Reality) Creation:

The next step involved preparing the augmented reality project for web upload via Zapworks. After building the project, I needed to compress the output into a ZIP file so it could be uploaded as a single package.
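The compression step can also be scripted. The sketch below is one possible approach in Python and is not part of the lab instructions. It assumes the build folder contains an `index.html` and that the host expects that file at the top level of the archive, which is worth confirming in the Zapworks documentation. The function name and the folder and file names in the usage comment are hypothetical.

```python
import zipfile
from pathlib import Path


def zip_build(build_dir, archive_path):
    """Zip the contents of build_dir so that index.html sits at the archive root."""
    build_dir = Path(build_dir)
    archive_path = Path(archive_path)
    if not (build_dir / "index.html").is_file():
        raise FileNotFoundError(f"no index.html found in {build_dir}")

    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(build_dir.rglob("*")):
            # Skip folders, and skip the archive itself if it lives in build_dir.
            if not path.is_file() or path.resolve() == archive_path.resolve():
                continue
            # Store paths relative to the build folder, not the folder itself.
            archive.write(path, path.relative_to(build_dir).as_posix())
    return archive_path


# Example usage (hypothetical folder and file names):
# zip_build("WebGLBuild", "ar-experience.zip")
```

Zipping the contents rather than the parent folder avoids an extra directory level inside the archive, which is a common reason for an uploaded web build not being found.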

The series of screenshots below illustrates the development process of the Augmented Reality workshop from lab exercise 2.

AR Practical Exercise 3 – Animated Content and Custom Content:

Incorporating Animated Assets

The final phase involved adding animated assets to the Unity scene. After completing this, I exported the build just as I had in the previous step and uploaded the final ZIP file to Zapworks.

The screenshots below show the progression of the animated content work from lab exercise 3.

When I tested the project by scanning the QR code, the experience didn’t go as expected. Instead of viewing the 3D model, only the image from the assets appeared on my screen. I’m still unsure why the model failed to load correctly. Unfortunately, I couldn’t capture the testing process with screenshots or images because my phone battery died during the attempt. I’ll need to troubleshoot the issue and retest the setup.

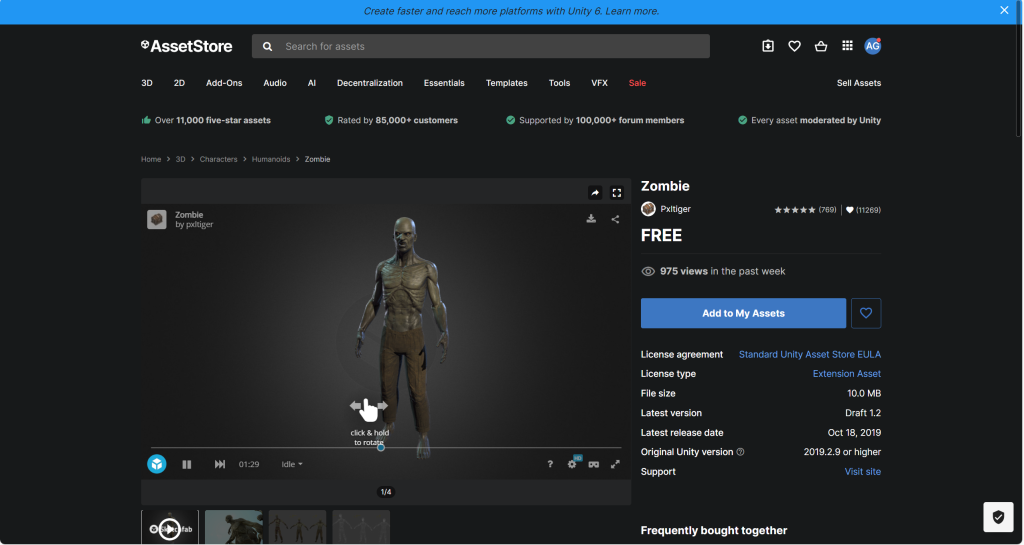

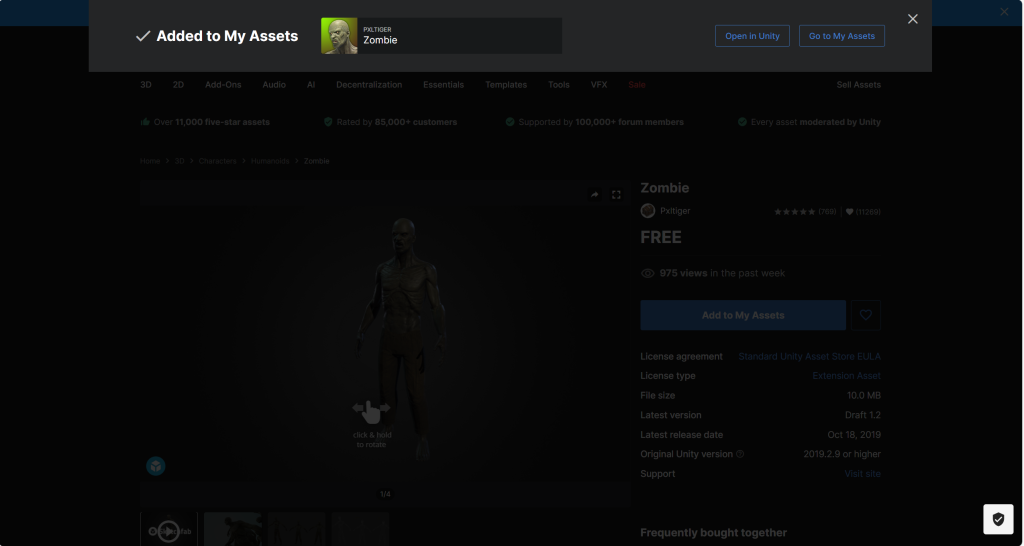

Experimenting with Zapworks and Unity’s WebGL for AR Design

After completing the initial Zapworks and Unity tutorials, I chose to incorporate a 3D asset into my augmented reality (AR) experience to explore more advanced integration techniques. For this experiment, I selected a low-poly zombie model from Unity’s Asset Store due to its manageable file size and suitability for AR applications. Following the procedures outlined in Tutorial 1, I successfully imported the model into Unity and implemented it in the AR environment.

However, when I attempted to build the WebGL scene, the process failed with the error shown in Figure 6.6. This issue needs to be resolved to finalize the Image Tracking Experience, because a successful WebGL build is essential for delivering the AR experience across platforms. Resolving the error will likely involve checking Unity’s build settings and confirming that the project and its packages are compatible with WebGL.

The screenshots below illustrate each stage of development for the custom content part of lab exercise 3.

Considerations for Using Adobe Aero

Pros of Adobe Aero:

- Seamless Integration with Adobe Creative Cloud: Easily works with other Adobe apps like Photoshop and Illustrator, streamlining the design process.

- Intuitive Gestures: Allows for simple asset manipulation using gestures like pinch, rotate, and drag, making it user-friendly.

- Supports App Clips: Enables faster loading of AR experiences without requiring full app downloads, improving accessibility.

- Interactive Content: Offers behavioral triggers that can create engaging and dynamic AR experiences, such as objects reacting to user interactions.

Cons of Adobe Aero:

- Limited to iOS: Currently only available for iOS devices, which restricts its accessibility to a broader audience.

- Lacks Advanced Features: Compared to other AR platforms, Adobe Aero is more basic and may not offer the level of complexity some projects require.

- Potential Stability Issues: Users have reported occasional bugs and crashes, which could affect the reliability of the platform during development.

As I move forward with my project, I will explore both tools during the development stage to determine which one best aligns with my needs. Adobe Aero’s simplicity may be perfect for quick, engaging experiences, while Unity might offer more potential if I decide to incorporate more complex interactions or expand the scope of my AR project.

Final Thoughts:

This initial exploration into augmented reality only scratches the surface of its potential, and I recognize that further research is needed to fully understand how to leverage it. I’m excited to integrate AR into my emerging tech project, and I’m now considering creating an interactive portfolio magazine.

Using Adobe Aero, I could design a magazine where users scan different pages, triggering AR content like 3D models or animated designs to appear. For instance, a scanned image of a product could display a rotating 3D version with clickable hotspots that provide additional information. Adobe Aero’s built-in features make it easier to import 3D objects and create animations without extensive coding, which is why it might be better suited to this project than Unity.

This AR-enhanced magazine could be a powerful tool for client presentations, blending physical and digital elements to showcase my work in an engaging and innovative way. As I move forward, I will explore both options, but Adobe Aero seems particularly promising for bringing this idea to life.

Reference list:

Aircada (2024) Exclusive Comparison: Adobe Aero vs Unity – Who Wins? [Blog Post]. Aircada. 2 September. Exclusive Comparison: Adobe Aero vs Unity – Who Wins? – Aircada Blog [Accessed 16 Oct 2024].

CNBC International News (2019) L’Oreal’s augmented reality acquisition helps with online brand experience | Marketing Media Money. [Video]. L’Oreal’s augmented reality acquisition helps with online brand experience | Marketing Media Money – YouTube [Accessed 15 Oct 2024].

GameSpot (2017) Pokémon GO – Legendary Trailer. [Video]. Advanced AI Capabilities (youtube.com) [Accessed 15 Oct 2024].

Harder, J. (2023) How Is A Game Like Pokemon Go An Example Of Augmented Reality. [Blog Post]. Robots.Net. 2 August. How Is A Game Like Pokemon Go An Example Of Augmented Reality | Robots.net [Accessed 15 Oct 2024].

Rock Paper Reality (2023) Augmented Reality in the Beauty & Cosmetics Industry. [Blog Post]. Rock Paper Reality. 14 November. Augmented Reality (AR) In the Beauty & Cosmetics Industry (rockpaperreality.com) [Accessed 16 Oct 2024].

eloiriel (n.d.) Stitch Low-Poly. [3D model]. Sketchfab. Stitch Low-Poly – Download Free 3D model by eloiriel (@eloiriel) [d630fdc] (sketchfab.com)